Privacy vs utility: why protecting privacy is not a trade-off

Discover why image and video blurring should be a standard for computer vision applications

09 December 2021

Introduction

Large, open-access datasets have been key to the rapid development of machine learning and computer vision techniques. While privacy was previously not a top priority, growing media coverage of data scandals related to facial recognition and video surveillance is shifting public attitudes towards data and putting privacy increasingly in the spotlight.

With this article, we want to shed some light on the technical, legal and ethical reasons why you should, whenever possible, anonymize faces and license plates in computer vision applications.

Technical Aspect

Among the possible methods, blurring has emerged as the de facto standard for anonymization because it offers the best trade-off between performance and image distortion. Companies such as Google, Microsoft and TomTom already use it.
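To make "blurring a face or license plate" concrete, here is a minimal sketch of blurring only the pixels inside a bounding box of a grayscale image. It assumes the box has already been produced by a separate detector (not shown), uses a plain box blur rather than the method of any company named above, and the function name `box_blur_region` is hypothetical. Note that a box blur is a linear filter, which matters for the reversibility question discussed below.

```python
from typing import List, Tuple

Image = List[List[int]]  # grayscale image: rows of pixel intensities (0-255)


def box_blur_region(img: Image, box: Tuple[int, int, int, int], radius: int = 1) -> Image:
    """Return a copy of img in which only the pixels inside box are box-blurred.

    box is (top, left, bottom, right) with bottom/right exclusive. Each pixel in the
    box is replaced by the rounded mean of its (2*radius+1)^2 neighbourhood, clipped
    to the image borders. Pixels outside the box are left unchanged.
    """
    top, left, bottom, right = box
    height, width = len(img), len(img[0])
    out = [row[:] for row in img]  # copy so the input image is not modified
    for y in range(top, bottom):
        for x in range(left, right):
            total, count = 0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < height and 0 <= nx < width:
                        total += img[ny][nx]  # always read from the original image
                        count += 1
            out[y][x] = round(total / count)
    return out


# Usage: a single bright "feature" pixel inside a 2x2 box gets smeared across the box.
image = [[0] * 4 for _ in range(4)]
image[1][1] = 90
blurred = box_blur_region(image, (1, 1, 3, 3), radius=1)
```

In practice a library such as OpenCV would be used for the filtering itself; the nested loops here just keep the sketch dependency-free.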

However, blurring personal data such as faces and license plates may raise concerns among data scientists about its impact on model accuracy and about whether it can be reversed.

Accuracy Impact on Neural Networks

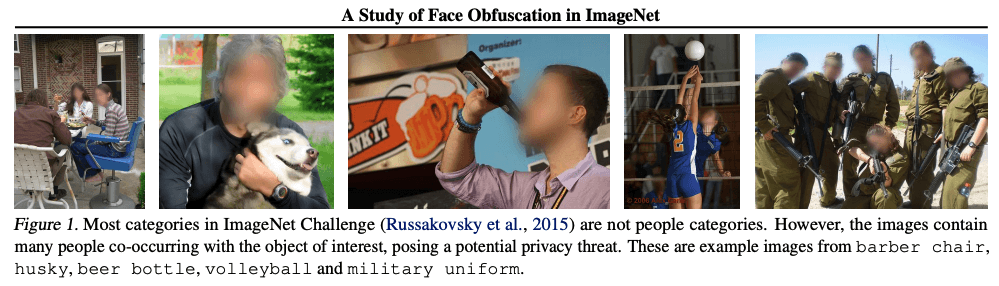

ImageNet’s team set out to answer the accuracy question. In a paper published in April 2021, they benchmarked various deep neural networks on face-blurred images and observed only a marginal loss of accuracy (≤ 0.68%).
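The benchmarking idea boils down to evaluating the same model on the original and on the face-blurred version of a validation set and comparing the two accuracies. A minimal sketch, assuming you already have the model's predictions for both versions (model and data loading are not shown; the function names are hypothetical and this is not the paper's code):

```python
from typing import Sequence


def top1_accuracy(labels: Sequence[int], predictions: Sequence[int]) -> float:
    """Fraction of samples whose predicted class equals the true class."""
    if not labels or len(labels) != len(predictions):
        raise ValueError("labels and predictions must be non-empty and of equal length")
    correct = sum(1 for y, p in zip(labels, predictions) if y == p)
    return correct / len(labels)


def accuracy_drop_pp(
    labels: Sequence[int],
    preds_original: Sequence[int],
    preds_blurred: Sequence[int],
) -> float:
    """Accuracy loss, in percentage points, when the inputs are face-blurred."""
    return 100 * (top1_accuracy(labels, preds_original) - top1_accuracy(labels, preds_blurred))


# Toy values for illustration only, not results from any study.
drop = accuracy_drop_pp([0, 1, 2, 3], [0, 1, 2, 0], [0, 1, 0, 0])  # → 25.0
```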

Similar studies on portrait matting and body language processing have provided empirical evidence that face blurring has only a minor impact on object detection and recognition models.

Reversibility

As we explain below, anonymization should be irreversible, i.e. re-identification should not be possible under any circumstances. While it might seem sufficient to apply a blur effect in Photoshop or similar programs, some of these techniques can be reversed.

If the blurring can be reversed with the appropriate key, the process counts as pseudonymization, not anonymization.
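To illustrate the difference, here is a toy keyed obfuscation: the pixel values of a region are shuffled with a permutation derived from a secret key, so anyone holding the key can restore the original exactly. That makes it pseudonymization in the sense above. This is a hypothetical sketch (`scramble` and `unscramble` are illustrative names), not a technique any product is claimed to use, and Python's `random` module is not cryptographically secure, so a real system would need a proper keyed cryptographic construction.

```python
import random
from typing import List


def scramble(pixels: List[int], key: int) -> List[int]:
    """Permute pixel values with a permutation derived from key (reversible with the key)."""
    order = list(range(len(pixels)))
    random.Random(key).shuffle(order)  # same key -> same permutation
    return [pixels[i] for i in order]


def unscramble(scrambled: List[int], key: int) -> List[int]:
    """Invert scramble() by regenerating the same permutation from the key."""
    order = list(range(len(scrambled)))
    random.Random(key).shuffle(order)
    original = [0] * len(scrambled)
    for out_pos, src_pos in enumerate(order):
        original[src_pos] = scrambled[out_pos]  # put each value back where it came from
    return original


face_region = [10, 20, 30, 40, 50, 60, 70, 80]
hidden = scramble(face_region, key=1234)
restored = unscramble(hidden, key=1234)  # identical to face_region
```

Because the original can be recovered whenever the key leaks, data processed this way is still personal data.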

A stronger layer of security can be achieved by using a non-linear filter (like Celantur Automated Blurring), so that the original data cannot be reconstructed. In contrast, it is theoretically possible to reverse blurring if a linear filter like Gaussian blur is used.
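The following toy 1D example sketches why a linear filter is theoretically reversible while a many-to-one non-linear filter is not. The two-tap filter stands in for a linear blur such as Gaussian blur (a real Gaussian kernel is larger, and inversion is complicated by rounding, clipping and noise); the 3-tap median filter stands in for a non-linear filter. Neither is Celantur's filter, whose internals the article does not describe, and all function names are hypothetical. What makes the median example irreversible is that different inputs produce the same output, so the information is simply gone.

```python
from statistics import median
from typing import List


def linear_blur(signal: List[int], a: float = 0.75, b: float = 0.25) -> List[float]:
    """Two-tap linear filter: y[i] = a*x[i] + b*x[i-1], with x[-1] taken as 0."""
    return [a * x + b * (signal[i - 1] if i > 0 else 0) for i, x in enumerate(signal)]


def invert_linear_blur(blurred: List[float], a: float = 0.75, b: float = 0.25) -> List[int]:
    """Recover x from y when the kernel (a, b) is known: x[i] = (y[i] - b*x[i-1]) / a."""
    recovered: List[int] = []
    prev = 0
    for y in blurred:
        prev = round((y - b * prev) / a)  # round back to integer pixel values
        recovered.append(prev)
    return recovered


def median_blur(signal: List[int]) -> List[int]:
    """3-tap median filter (non-linear); the two border samples are copied unchanged."""
    out = signal[:]
    for i in range(1, len(signal) - 1):
        out[i] = median(signal[i - 1:i + 2])
    return out


row = [12, 200, 37, 90, 90, 5]
recovered_row = invert_linear_blur(linear_blur(row))  # exact recovery of row

# Two different inputs, same median-filtered output: no way to tell them apart.
collision = median_blur([0, 9, 0, 0, 0]) == median_blur([0, 0, 0, 0, 0])  # True
```

Knowing (or estimating) the kernel plays the role of the "key" here, which is why a linear blur alone is a weaker guarantee.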

“[...] account should be taken of all objective factors, such as the costs of and the amount of time required for identification, taking into consideration the available technology at the time of the processing and technological developments” (Recital 26 GDPR, definition of anonymization).

In other words, anonymization does not need to be infallible, but it must be robust enough to withstand the means of re-identification that are reasonably likely to be used.

Legal Aspect

Consent vs. Anonymization

When personal data is involved, major privacy laws mandate the explicit consent of the people in a dataset before it can be used. In addition, under the principle of data minimization, the GDPR requires a justification that there is no alternative to processing personal data.

Anonymized data, i.e. data rendered anonymous in such a way that the data subject is not or no longer identifiable, offers a way to avoid these legal complications under the GDPR and the CCPA.

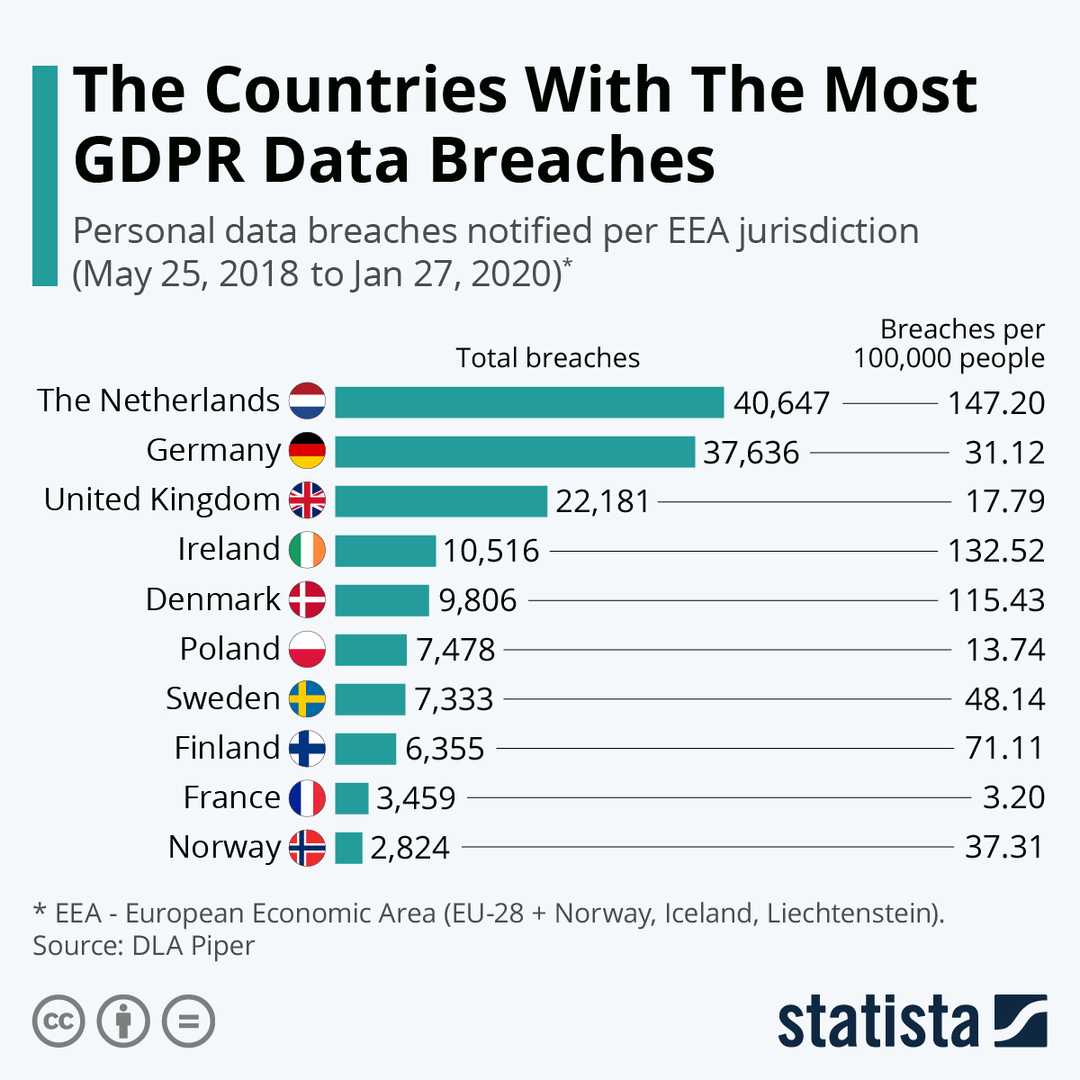

Data Breaches

Another critical issue arises with breaches of personal data, which can lead to a fine if the data protection authority (DPA) finds violations of the following articles:

- Art. 32: Insufficient security measures, such as data minimization, anonymization, etc.

- Art. 33: Delayed notification of a personal data breach to the supervisory authority

So far, more than 300 fines have been issued, ranging from EUR 200 (homeowners, attorneys, etc.) up to EUR 30 million (British Airways, Marriott).

While compliance with Art. 33 (data breach notification) is up to the data owner or subprocessor, using anonymized data is one of the strongest preventive measures against breaches of personal data, since anonymized data is no longer considered personal data.

Ethical Aspect

For years, studies that collected enormous datasets of people’s faces without consent have (voluntarily or not) helped commercial or malicious surveillance algorithms. Berlin-based Adam Harvey and Jules LaPlace curate Exposing.ai, a website that flags privacy-breaching image datasets. Some of the biggest ones were scraped from the Internet, such as MegaFace (4.7 million photos from the image-sharing site Flickr) and MSCeleb (10 million images of nearly 100,000 journalists, musicians and academics).

What would happen if public datasets (like those mentioned above) or leaked ones (resulting from a data breach) ended up being used for harmful purposes? As it turns out, some of these datasets have already been cited in papers related to malicious projects.

In Summary

Privacy was declared a human right in the Universal Declaration of Human Rights: Art. 12 states that “No one shall be subjected to arbitrary interference with his privacy [...]”.

As we outlined in this article, the legal and technical frameworks for privacy-aware computer vision applications are reaching maturity. Scientists are urging their peers to avoid working on unethical projects, and companies are withdrawing from the facial recognition business (e.g. IBM, Amazon and Microsoft) or changing their privacy policies (e.g. Twitter).
